What is Docker and how do you install it?

- October 3, 2017


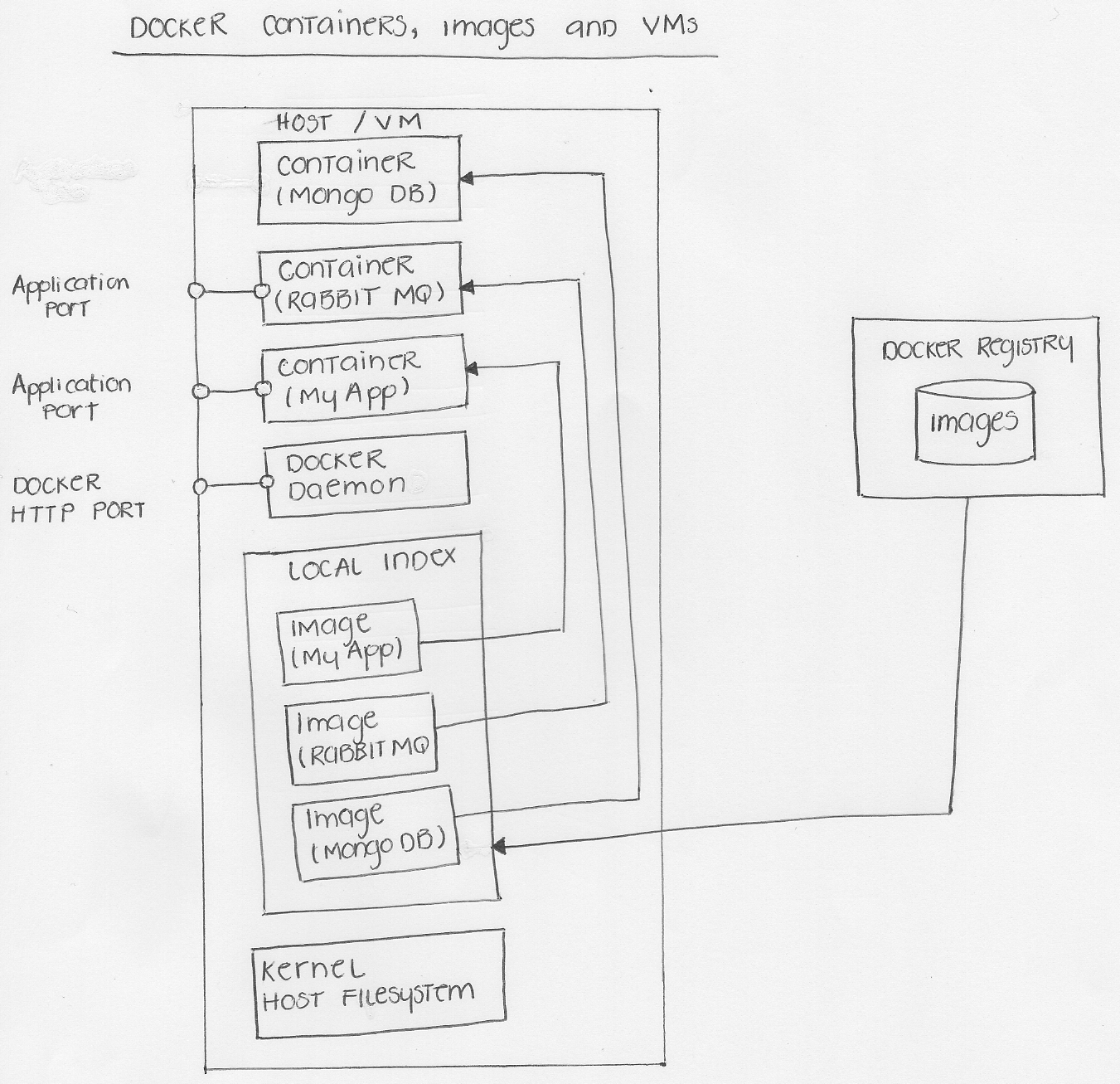

Docker is a container platform that lets users create, deploy and run applications in containers. Docker containers share the host operating system's kernel, so they use system resources much more efficiently than virtual machines running on a hypervisor, each of which needs its own guest operating system. Docker lets developers package an application into an image that bundles everything it needs, such as libraries and other dependencies, so the whole application can be handled as a single unit. A Docker registry such as Docker Hub works much like GitHub, except that it stores ready-built images rather than source code.

These images contain everything required to run a piece of software, including the code, libraries, dependencies and runtime. A container is a running instance of an image, and it keeps the application isolated from the host system it runs on. Containers do not need an entire operating system, only the components the application requires, which makes efficient use of server resources and helps each application perform well.
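To make "code, libraries, dependencies and runtime" concrete, here is a minimal sketch of a Dockerfile, the recipe from which `docker build` creates an image. It assumes a hypothetical Python application with an `app.py` entry point and a `requirements.txt` listing its libraries; the base image `python:3.11-slim` is only an example choice. The shell function `write_dockerfile` is hypothetical and simply writes that file into a directory.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a minimal Dockerfile into directory $1
# (default: current directory). The app layout (app.py, requirements.txt)
# is an assumption for illustration.
write_dockerfile() {
  local dir="${1:-.}"
  cat > "$dir/Dockerfile" <<'EOF'
# Base image: supplies the Python runtime and a minimal OS userland
FROM python:3.11-slim
# Working directory inside the image
WORKDIR /app
# Install the libraries the application depends on
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
# Add the application code itself
COPY . .
# Command a container runs when it starts from this image
CMD ["python", "app.py"]
EOF
}
```

For example, `write_dockerfile . && docker build -t my-app .` would build an image named `my-app` (a placeholder name) from the current directory, and every `docker run my-app` would start a new container from that same image.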

How to install Docker

Before starting, keep in mind that a Docker container holds all the files and directories an application needs, so the application can be deployed without depending on what is installed on the host operating system. Now let us see how to install Docker and how to use its images.

Here we use CentOS 7. Docker packages are included in the Extras repositories of RHEL/CentOS 7, so the installation is straightforward. Run the following commands as root:

    yum update -y             # update the current system
    yum install docker        # install Docker
    systemctl start docker    # start the Docker daemon
    systemctl status docker   # check that the daemon is running
    systemctl enable docker   # start Docker automatically at boot

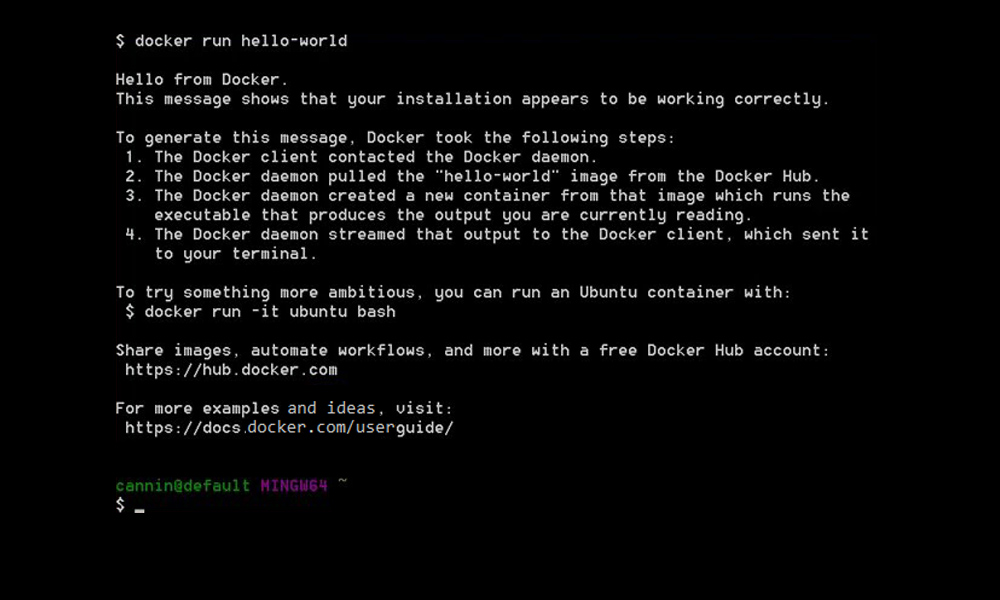
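Right after `systemctl start docker`, the daemon can take a moment before it accepts commands, so a provisioning script may want to wait for it. Below is a minimal sketch of a hypothetical `wait_for_docker` helper (not part of Docker itself) that polls `docker info`, which exits with a non-zero status while the daemon is unreachable. The default of 10 attempts and the one-second delay are arbitrary assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll the Docker daemon until it responds.
# $1 = maximum number of attempts (default 10, an arbitrary choice).
wait_for_docker() {
  local attempts="${1:-10}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    # `docker info` fails while the daemon is not reachable
    if docker info >/dev/null 2>&1; then
      echo "Docker daemon is ready"
      return 0
    fi
    sleep 1   # wait one second before the next attempt
  done
  echo "Docker daemon did not respond after $attempts attempts" >&2
  return 1
}
```

For example, `systemctl start docker && wait_for_docker 30` would give the daemon roughly 30 seconds to come up before the script continues.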

Once the daemon is running, verify the installation and try a few basic commands:

    docker run hello-world   # verify that Docker is installed correctly
    docker info              # system-wide information about the Docker installation
    docker version           # client and server version details
    docker search ubuntu     # search for an image suited to your task
    docker pull ubuntu       # download an image so you can use it locally

If the installation works, "docker run hello-world" prints a message that begins "Hello from Docker!" followed by "This message shows that your installation appears to be working correctly."
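A script that runs repeatedly, or on a machine with limited network access, may only want to contact the registry when an image is missing. This is a sketch of a hypothetical `ensure_image` helper built on `docker image inspect`, which exits with a non-zero status when the image is not present locally.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pull an image only if it is not already present locally.
# $1 = image name, e.g. ubuntu or ubuntu:22.04
ensure_image() {
  local image="$1"
  # `docker image inspect` succeeds only if the image exists locally
  if docker image inspect "$image" >/dev/null 2>&1; then
    echo "$image is already present locally"
  else
    echo "pulling $image"
    docker pull "$image"
  fi
}
```

For example, `ensure_image ubuntu` pulls Ubuntu on the first run and skips the download on later runs.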

Optimizing Performance

Docker lets many applications share the Linux kernel of the host they run on. Each application only needs to be built as an image and pushed to a registry, and Docker takes care of deploying and running it. An image contains the application and its dependencies rather than an entire operating system, so it is far smaller than a virtual machine image. The result is better performance and faster start-up than a virtual machine, which has to run a whole guest OS. Docker also makes it possible to move workloads across cloud services and physical or virtual machines without locking them into specific infrastructure tooling. That is how Docker helps enterprises optimize their infrastructure.

We can also start or stop individual containers to free up system resources when they are not needed. Note that these commands act on containers, not images:

    docker start <container id>   # start a stopped container
    docker stop <container id>    # stop a running container

We can monitor our containers with:

    docker ps      # list running containers
    docker ps -a   # list all containers, including stopped ones
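Stopping a whole set of containers one ID at a time gets tedious. A minimal sketch, assuming the containers you want to stop share a common name fragment: `docker ps -q --filter "name=..."` prints only the IDs of running containers whose names match, and those IDs are passed to `docker stop`. The function name `stop_matching` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: stop every running container whose name matches $1.
stop_matching() {
  local pattern="$1"
  local ids
  # -q prints only container IDs; the name filter matches part of the name
  ids=$(docker ps -q --filter "name=$pattern")
  if [ -z "$ids" ]; then
    echo "no running containers match $pattern"
    return 0
  fi
  # Word-splitting on $ids is intended: one argument per container ID
  # shellcheck disable=SC2086
  docker stop $ids
}
```

For example, `stop_matching web` would stop containers named `web1` and `web2` in one call.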

Reduce Deployment Time with Docker

Docker is built for both developers and system administrators, which makes it part of many DevOps toolchains. Developers use Docker to avoid “works on my machine” problems when collaborating on code with their coworkers. Because Docker runs applications in isolated containers on a shared OS, deployment time drops: developers build their application as an image on their own system and push it to a registry such as Docker Hub, and system administrators only need to pull that image and run it, regardless of the environment in which it was created. This also spares system administrators much of the effort of deploying an application at scale across many servers.

For example, suppose an e-commerce website such as eBay wants to put a seasonal sale page on its virtual host for two days. If the system administrator already has an image for that page, they can start a container from it within seconds, without interfering with other tasks.
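The sale-page scenario can be sketched as two hypothetical helpers: one starts the page in the background, the other removes it when the sale ends. The image name `example/sale-page:latest` and the port mapping 8080 to 80 are assumptions for illustration; the `docker run` options `-d`, `--name` and `-p` and `docker rm -f` are standard.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for a short-lived page. The image name and ports
# are assumptions for illustration, not part of any real deployment.
SALE_IMAGE="${SALE_IMAGE:-example/sale-page:latest}"
SALE_NAME="sale-page"

# Start the page in the background (-d), publishing host port 8080
# to port 80 inside the container.
start_sale_page() {
  docker run -d --name "$SALE_NAME" -p 8080:80 "$SALE_IMAGE"
}

# When the sale ends, stop and delete the container in one step (-f).
remove_sale_page() {
  docker rm -f "$SALE_NAME"
}
```

Because the page lives in its own named container, removing it afterwards leaves the rest of the host untouched.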

Command to build an image (run it in the directory that contains the Dockerfile; the trailing dot is the build context):

    docker build -t <image name> .

Command to push the image to a registry (for Docker Hub, the image name must include your account, e.g. <username>/<image name>):

    docker push <image name>   # assumes you have already run "docker login"

Command to pull and run it on the server side:

    docker run <image name>    # pulls the image first if it is not present locally

If the image is in a private repository, run "docker login" on the production server first; otherwise Docker pulls from Docker Hub by default.
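The build, push and run steps above can be wrapped into two small scripts, one for the developer and one for the server. This is a minimal sketch with hypothetical function names, assuming a Dockerfile in the current directory, a prior `docker login`, and an image name of the form `<account>/<repo>:<tag>`. The server side removes any old container with the same name before starting the new one.

```bash
#!/usr/bin/env bash
# Hypothetical developer-side helper: build and publish an image.
# $1 = image name, e.g. <account>/<repo>:<tag>
build_and_push() {
  local image="$1"
  # Build from the current directory (the build context) and tag the result
  docker build -t "$image" . || return 1
  # Upload the tagged image to the registry
  docker push "$image"
}

# Hypothetical server-side helper: fetch the image, then replace any old
# container of the same name with a fresh one.
# $1 = image name, $2 = container name
deploy() {
  local image="$1" name="$2"
  docker pull "$image" || return 1
  docker rm -f "$name" >/dev/null 2>&1   # ignore "no such container"
  docker run -d --name "$name" "$image"
}
```

For example, a developer would run `build_and_push <account>/shop:1.0`, and the administrator would run `deploy <account>/shop:1.0 shop` on each server.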

Docker and Security

Security is crucial when handling large amounts of data, and you cannot rely on servers and containers alone to provide it. Developers have to take security measures themselves, writing their code and configuring their containers so that the Docker containers stay secure. This takes practical knowledge and fluency with the commands, and that expertise comes with time and experience.
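As one concrete starting point, and not a complete security policy, a container can be started with fewer privileges than Docker grants by default. This sketch uses standard `docker run` options: `--read-only` (read-only root filesystem), `--cap-drop ALL` (drop all Linux capabilities), `--security-opt no-new-privileges` (block privilege escalation through setuid binaries) and `--user` (run as a non-root UID:GID). The `run_hardened` helper and the UID/GID `1000:1000` are assumptions; many images need some capabilities or writable paths added back, so test your application with these flags.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run an image with reduced privileges.
# $1 = image name; any further arguments are passed to the container.
# The UID:GID 1000:1000 is an assumption; use one that exists in your image.
run_hardened() {
  local image="$1"
  shift
  docker run -d \
    --read-only \
    --cap-drop ALL \
    --security-opt no-new-privileges \
    --user 1000:1000 \
    "$image" "$@"
}
```

If the application fails under these restrictions, add back only what it needs (for example a single capability with `--cap-add`) rather than dropping the hardening altogether.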