Server Latency

- March 13, 2020

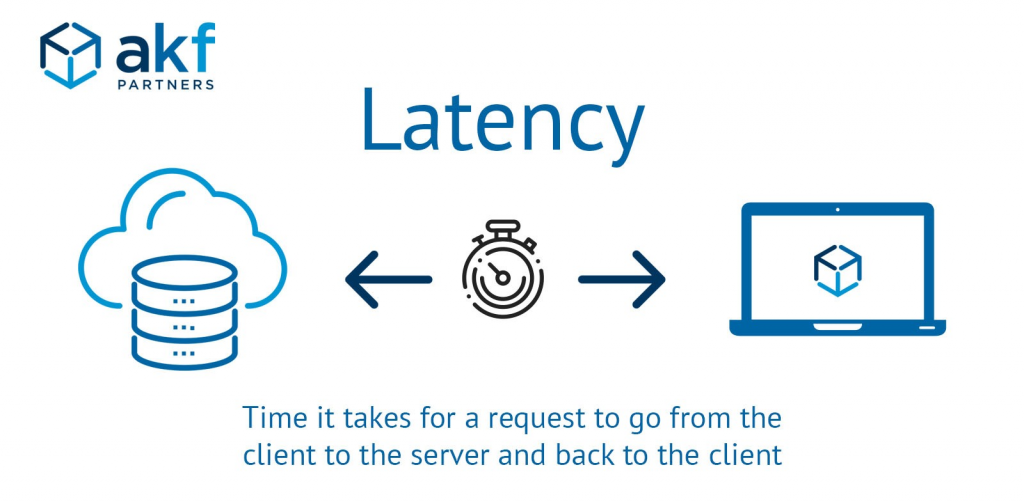

Before giving a combined definition, it helps to understand the terms server and latency individually.

To start with the server: it is a system or device that provides data to other computers, known as clients, over a network. Servers are usually dedicated machines: they concentrate on their serving tasks and give little priority to non-server tasks. A server can provide data to systems on both wide area networks (WAN) and local area networks (LAN).

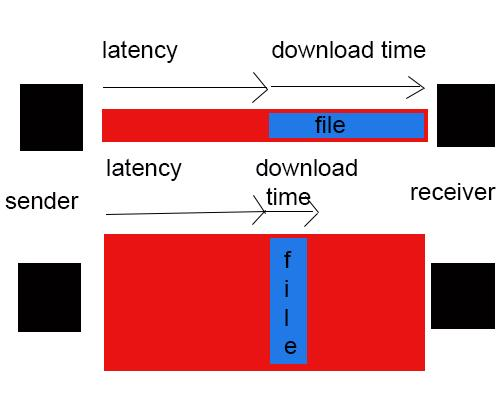

Coming to latency, the word is related to ‘latent’, meaning something that exists but has not yet developed. When applied to computers, it can be defined as the delay that occurs during data transfer. Literally, it is the time taken by data bits to travel from sender to receiver.

Building on these brief definitions, let us move on to server latency. Here you are the sender and the server is the receiver. Thus the time it takes for a message you send to reach the server is referred to as ‘LATENCY’.

Image Source: https://akfpartners.com/growth-blog

Slow internet speed is often blamed on bandwidth, but the real cause is frequently latency. Better speed and less congestion depend on both latency and bandwidth. Bandwidth refers to the amount of data that can be transferred in a given time, while latency refers to the delay before that data arrives. However, each one is affected by the other.
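
As a rough illustration of how the two interact, delivery time can be approximated as the latency plus the payload size divided by the bandwidth. The sketch below uses that simplified model. The function name and the sample figures are assumptions chosen for illustration, and real transfers are also affected by protocol overhead and congestion.

```python
def transfer_time_ms(size_bytes: float, bandwidth_mbps: float, latency_ms: float) -> float:
    """Approximate delivery time as latency plus time to push the bits onto the link."""
    bits = size_bytes * 8
    serialization_ms = bits / (bandwidth_mbps * 1_000_000) * 1000
    return latency_ms + serialization_ms


# Hypothetical link: 100 Mbps bandwidth, 50 ms latency.
small = transfer_time_ms(2_000, bandwidth_mbps=100, latency_ms=50)        # 2 KB message
large = transfer_time_ms(100_000_000, bandwidth_mbps=100, latency_ms=50)  # 100 MB file

print(f"2 KB message: {small:.2f} ms")
print(f"100 MB file: {large:.0f} ms")
```

Under these assumed numbers, almost all of the time for the small message is latency, while the large file is dominated by bandwidth.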

Tactics to reduce server latency

When considering the performance of a system, time delay matters, and it becomes critical when it is high. This indirectly makes the system vulnerable. The tactics below can reduce this latency to some extent.

To deliver web resources faster, server latency should be reduced, which in turn increases the quality time users spend on the system.

Various techniques are used to reduce server latency. A few are given below:

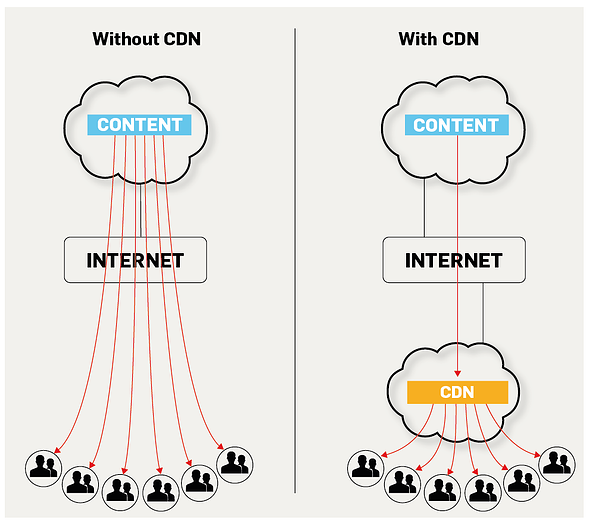

A content delivery network (CDN) brings resources closer to users by caching them in multiple locations. This, in turn, lets the user's request go to a nearby point rather than all the way to the origin server.

Reduce the number of HTTP requests, since unnecessary requests make the system and the connection slower and can increase server latency.

Using the well-established HTTP/2 protocol lowers server latency. It minimizes the number of round trips between the client and the server.

Browser caching is another way to improve latency, because it reduces the number of requests from the user. The browser cache is a temporary location on your system that stores website resources so they can be displayed without being fetched again.
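
The idea behind caching can be sketched in a few lines. This is a simplified in-memory model, not how any particular browser or CDN is implemented. The class `TTLCache`, the helper `fake_fetch`, and the URL are hypothetical names used only for this example.

```python
import time


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float):
        self.ttl_seconds = ttl_seconds
        self._store = {}  # url -> (expiry_time, body)

    def get(self, url, fetch):
        now = time.monotonic()
        entry = self._store.get(url)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: no request leaves the machine
        body = fetch(url)    # cache miss: pay the full round trip
        self._store[url] = (now + self.ttl_seconds, body)
        return body


calls = []  # records every simulated request to the origin server


def fake_fetch(url):
    """Stand-in for a real network request (hypothetical)."""
    calls.append(url)
    return f"content of {url}"


cache = TTLCache(ttl_seconds=60)
first = cache.get("https://example.com/logo.png", fake_fetch)
second = cache.get("https://example.com/logo.png", fake_fetch)
print(len(calls))  # number of requests that actually reached the "server"
```

The second lookup is served from the cache, so only one request reaches the simulated server.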

GOOD LATENCY

‘Low latency is considered to be a Good latency’

As mentioned earlier, the less time data takes for its round trip, the better the latency. Latency is measured in milliseconds.

For most purposes, an average latency lower than (i.e. faster than) 50 ms is considered good, and a latency higher than (i.e. slower than) 100 ms can cause setbacks. This is tested with the help of ping. A 10 ms ping is perfect, while 40 ms to 60 ms is considered about average. The lower the number, the faster the ping traveled. For example, when playing online games, bandwidth or high download speed matters little, but latency becomes vital, as these types of games exchange only a very small amount of data between servers and computers.
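
The thresholds above can be expressed as a small helper. This is only a sketch: the function name and the label for the band between 50 ms and 100 ms ("acceptable") are assumptions, since the text only names the good and the problematic ranges.

```python
def rate_latency(ping_ms: float) -> str:
    """Classify a ping time using the 50 ms and 100 ms thresholds from the text."""
    if ping_ms < 0:
        raise ValueError("ping time cannot be negative")
    if ping_ms < 50:
        return "good"
    if ping_ms <= 100:
        return "acceptable"  # assumed label for the in-between band
    return "problematic"


for sample_ms in (10, 45, 80, 150):
    print(sample_ms, "ms ->", rate_latency(sample_ms))
```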

WHY IS MY LATENCY HIGH

High latency means high ping, and it is most often caused by the common factors listed below:

⌁ Congested routers on the path to the target system.

⌁ Insufficient or low-grade bandwidth.

⌁ Heavy internet congestion.

These factors need a direct resolution. High latency often cannot be rectified by the user alone, so contacting the internet service provider may help the situation. Even then, a perfectly consistent latency is not achievable.

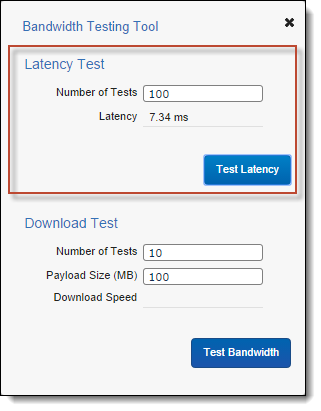

SERVER LATENCY TEST

Methods to measure server latency are as follows:

WoW Test displays the online and offline status of servers.

DNS Test measures the time taken to convert the domain to the IP address of the site.

Web Test

Fortnite Test finds the ping between the device and the server.

Azure Test specializes in internal monitoring and can measure inter-region latency within Azure.

SQL, AWS, and Server ping latency tests are the remaining methods for measuring server latency.

Image Source : https://help.relativity.com/

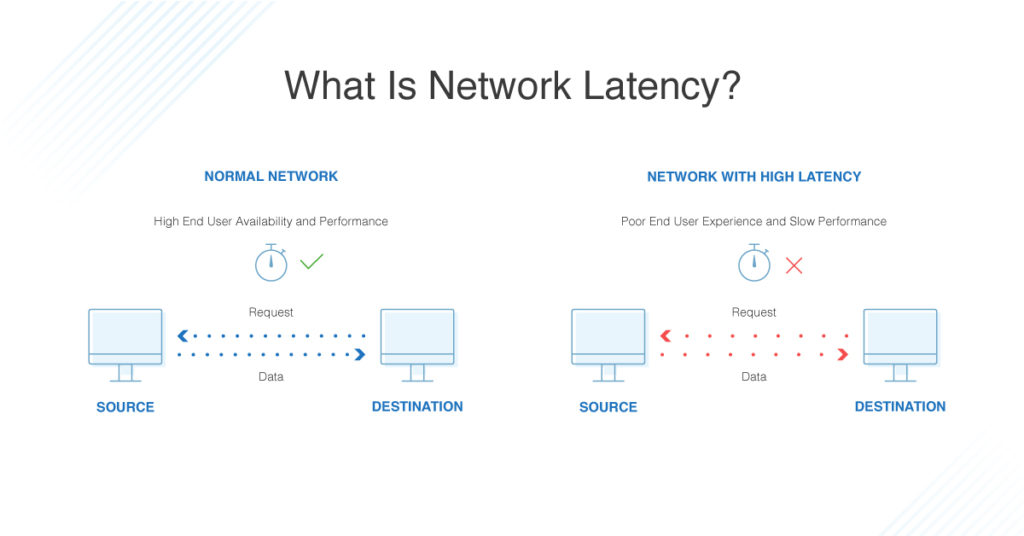

LATENCY IN NETWORKING

This is one type of latency. When communicating over networks, the delay that occurs during data communication is network latency. Networks with short delays are low latency networks; conversely, networks with longer delays are high latency networks. Usually, higher network latency creates hurdles.

Common causes of high latency include:

§ Improper usage of software, or software failure, may cause high latency.

§ The travel time a packet spends getting from the source to the destination.

§ Errors occurring in switches or routers may affect the travel time, as each packet has to be checked and its header keeps being changed.

§ Setbacks occurring within the transmission.

Image Source: https://www.dnsstuff.com/network-latency

NETWORK LATENCY TEST

The ping test and the traceroute test are the main testing tools for network latency.

The ping test lets us enter a site address and reports the ping time. On cellular connections, latency can be between 200 and 600 ms. For dial-up connections, 100-220 ms is considered normal; for a digital subscriber line (DSL), 10-70 ms is normal; and a cable modem connection has the lowest normal range, 5-40 ms. The ping test also helps estimate the effect of a remote server’s distance, i.e. the travel time of the data, which is added to the latency. This is calculated as 1 ms of latency for every 100 km (60 miles).
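
The figures above can be collected into a small lookup, together with the distance rule of thumb. This is a sketch using only the numbers quoted in the text. The names `TYPICAL_LATENCY_MS`, `distance_latency_ms`, and `is_within_typical_range` are hypothetical, as is the 1,500 km example distance.

```python
# Typical latency ranges in milliseconds, as quoted in the text above.
TYPICAL_LATENCY_MS = {
    "cable": (5, 40),
    "dsl": (10, 70),
    "dial-up": (100, 220),
    "cellular": (200, 600),
}

MS_PER_100_KM = 1.0  # rule of thumb from the text: 1 ms per 100 km


def distance_latency_ms(distance_km: float) -> float:
    """Estimate the latency added by distance alone."""
    return distance_km / 100 * MS_PER_100_KM


def is_within_typical_range(connection: str, measured_ms: float) -> bool:
    """Check a measured ping against the typical range for a connection type."""
    low, high = TYPICAL_LATENCY_MS[connection]
    return low <= measured_ms <= high


added = distance_latency_ms(1500)  # server assumed to be 1,500 km away
print(f"Distance adds about {added:.0f} ms")
print(is_within_typical_range("dsl", 35))
```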

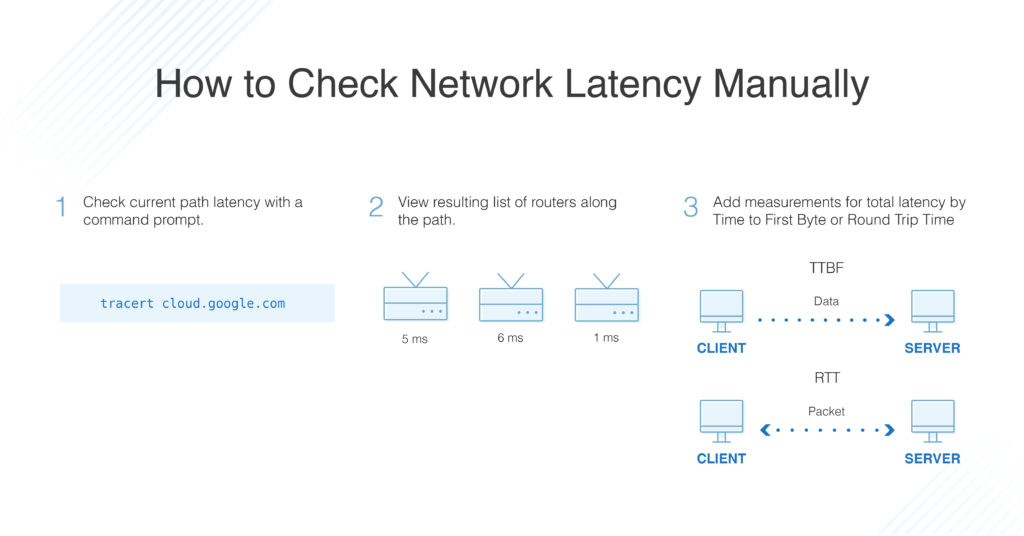

The traceroute test shows the path the data traveled as well as the delay along it. In particular, the source of network delays can be found with the help of this tool. These tests can be run using network utility applications in macOS.

On Windows, the command prompt can be used to run a network latency test. Another option is the ping loopback test, which checks for hardware problems behind the network latency.

Latency can also be measured using two essential methods. The first is TTFB (Time to First Byte), which measures the amount of time between the client sending a request to the server and the moment the first byte is received.

The second is RTT (Round Trip Time), which measures the amount of time taken for a request and its reply to pass between the client and the server.
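
Both measurements can be approximated with Python's standard `socket` module. This is a minimal sketch, not a replacement for dedicated tools. It assumes a plain HTTP server (no TLS), approximates RTT as the time the TCP handshake takes, and starts the TTFB clock when the request is sent, so connection setup is excluded. The function names are hypothetical.

```python
import socket
import time


def measure_connect_rtt_ms(host: str, port: int = 80, timeout: float = 5.0) -> float:
    """Approximate RTT as the duration of the TCP handshake."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000


def measure_ttfb_ms(host: str, port: int = 80, path: str = "/", timeout: float = 5.0) -> float:
    """Time from sending an HTTP request until the first response byte arrives."""
    request = (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Connection: close\r\n\r\n"
    ).encode("ascii")
    with socket.create_connection((host, port), timeout=timeout) as sock:
        start = time.perf_counter()
        sock.sendall(request)
        first_byte = sock.recv(1)  # blocks until the server starts replying
        elapsed = time.perf_counter() - start
    if not first_byte:
        raise ConnectionError("server closed the connection without responding")
    return elapsed * 1000
```

For example, `measure_ttfb_ms("example.com")` would return the time to first byte in milliseconds for that host, given network access. Results vary from run to run, so averaging several measurements gives a steadier figure.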

Apart from the above, a tool named MTR (My Traceroute), a combination of traceroute and ping, is an essential tool for detailed latency tests. It was originally called Matt’s traceroute.

Image Source : https://www.dnsstuff.com/network-latency

Debugging – NETWORK LATENCY ISSUES

To reduce latency, the lag should be fixed first, which in turn improves download speed.

The following can help keep latency low to some extent:

Once the occurrence of latency is confirmed, the first step is to find the point where it is being generated, and the final step is to identify and eliminate the source of the latency.
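
Finding the point where latency is generated can be sketched as follows, assuming you already have the cumulative round trip time to each hop on the path, as a traceroute-style tool reports. The function name, hop names, and timings are made up for illustration. Real traceroute output is noisier, so treat the result as a hint rather than a diagnosis.

```python
def find_slowest_hop(hops):
    """Return (hop_name, added_ms) for the hop that adds the most delay.

    hops is a list of (hop_name, cumulative_rtt_ms) tuples in path order.
    """
    if not hops:
        raise ValueError("at least one hop is required")
    worst_name, worst_added = None, float("-inf")
    previous_rtt = 0.0
    for name, rtt in hops:
        added = rtt - previous_rtt  # delay contributed by this hop alone
        if added > worst_added:
            worst_name, worst_added = name, added
        previous_rtt = rtt
    return worst_name, worst_added


# Hypothetical path with cumulative RTTs in milliseconds.
sample_path = [
    ("home-router", 1.2),
    ("isp-gateway", 9.8),
    ("isp-core", 11.5),
    ("transit-peer", 84.0),
    ("destination", 86.3),
]

slowest_name, slowest_added = find_slowest_hop(sample_path)
print(f"{slowest_name} adds about {slowest_added:.1f} ms")
```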

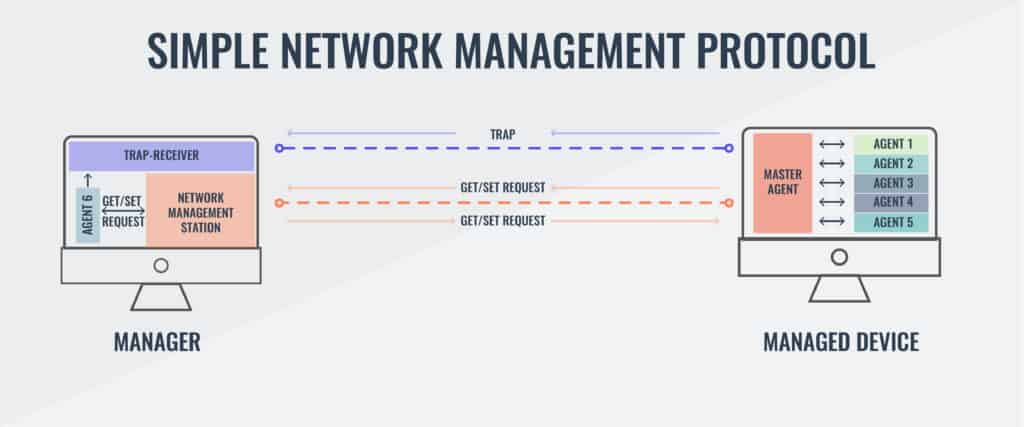

Apart from universal tools like ping and traceroute, other tools exist to troubleshoot latency issues, such as protocol analyzer tools, SNMP (Simple Network Management Protocol) monitoring tools, NetFlow analyzer tools, etc.

Image Source : https://www.comparitech.com/

SNMP monitoring tools are two-in-one tools: they can perform network monitoring and track down network hurdles as well. In turn, these hurdles could be the source of latency. In addition, they are also used to track down hardware faults.

NetFlow analyzer tools are used to examine the data sent across a network.

When the rest of the tools have failed to determine the cause of latency, a protocol analyzer tool such as Wireshark is used, as it can perform deep inspection of packets.

Application performance monitoring tools are used to uncover latency issues through deep inspection of the application.

How to improve Latency

Improving latency means reducing the latency level, so here are some ways to reduce it:

Uninstall unnecessary firewalls to avoid network congestion, as each one uses extra system time to inspect incoming and outgoing network traffic.

Avoiding faulty network hardware helps to improve latency. Connecting an ethernet cable directly to the modem helps here, and it also helps to identify the faulty hardware.

Using an ethernet cable not only improves latency but can reduce cost as well. Wired connections are generally more reliable and save time too.

For better network performance, avoid sharing one connection among too many devices, as this slows things down and increases latency.

Restart the network hardware periodically to avoid system slowdown.

In conclusion, server latency can be rectified and kept low with the help of the ways mentioned above.