10 Methods to Prevent Web Server Overload

- January 19, 2018

Web server overload is a common problem, no matter how experienced an organization is at handling server-side matters. Even popular sites like Facebook and YouTube have suffered server crashes, so it is no wonder if you are struggling with it, especially if you are new to this. Years of research and a number of real-world case studies have identified the causes of web server overload, its signs, and the preventive measures that help keep it from recurring.

Causes of Web Server Overload

A web server can become overloaded at any time for the following reasons:

1. Partial unavailability of web servers – This can happen because of planned or urgent maintenance or upgrades, hardware or software crashes, back-end malfunctions, etc. In these circumstances, the remaining web servers receive too much traffic and become overloaded.

2. Excess legitimate web traffic – Numerous clients connecting to the website within a brief interval may cause a web server overload. For instance, have you ever noticed that your university website would not load at all when your semester results were published? This causes a sudden spike, even though it does not last long.

3. Computer worms and XSS worms – These cause abnormal traffic from millions of infected computers, browsers, or web servers. Once they are released, the extra traffic can significantly reduce the capacity left for legitimate requests.

4. Denial of Service/Distributed Denial of Service (DoS/DDoS) attacks – A denial-of-service or distributed denial-of-service attack is an attempt to make a computer or network resource unavailable to its intended users. In the distributed form, the target is attacked by many other systems at once. These systems are themselves controlled by the attacker(s), who have typically compromised them beforehand, for example by guessing weak passwords. Overall, the network is flooded with packets, which ultimately crashes the server or leaves it unable to answer legitimate requests.

5. Network slowdowns – Client requests are completed more slowly, so the number of open connections grows until server limits are reached.

Signs of Web Server Overload

1. Requests are served with delays ranging from 1 second to a few hundred seconds.

2. The web server returns an HTTP error code such as 500, 502, 503, 504, or 408, which indicates an overload condition.

3. The web server refuses or resets TCP connections before it returns any content.

4. Sometimes the server delivers only part of the requested content. This can be mistaken for a bug, even though it usually occurs as a symptom of overload.
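The signs above can be turned into a simple check. The following is a minimal Python sketch, not a production monitor: `ProbeResult`, `overload_signs`, and the 1-second threshold are hypothetical names and values chosen for illustration, and the status codes are the ones listed above.

```python
from dataclasses import dataclass
from typing import List, Optional

# Status codes listed above as typical overload responses.
OVERLOAD_STATUS_CODES = {408, 500, 502, 503, 504}

# Assumed threshold: the list above only says delays start at about 1 second.
SLOW_RESPONSE_SECONDS = 1.0


@dataclass
class ProbeResult:
    """Outcome of one request to the server (all fields are illustrative)."""
    status: Optional[int]                 # None if the connection was refused or reset
    elapsed_seconds: float                # time until the response finished
    bytes_received: int = 0
    bytes_expected: Optional[int] = None  # e.g. taken from Content-Length, if known


def overload_signs(result: ProbeResult) -> List[str]:
    """Return the overload signs that a single probe result shows."""
    if result.status is None:
        # Sign 3: the connection was refused or reset before any content arrived.
        return ["connection refused or reset"]

    signs = []
    if result.elapsed_seconds >= SLOW_RESPONSE_SECONDS:
        signs.append("slow response")                 # sign 1
    if result.status in OVERLOAD_STATUS_CODES:
        signs.append(f"HTTP {result.status}")         # sign 2
    if (result.bytes_expected is not None
            and result.bytes_received < result.bytes_expected):
        signs.append("partial content")               # sign 4
    return signs
```

A single slow or failed request proves little on its own. In practice such a check would be run repeatedly, and only a sustained rise in reported signs would be treated as overload.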

How to Prevent Web Server Overload?

To partially overcome above-average load limits and to prevent overload, most large websites use common techniques such as the following:

1. By managing network traffic: using firewalls to block unwanted traffic coming from bad IP sources or showing bad patterns. HTTP traffic managers can be placed to drop, redirect, or rewrite requests that have bad HTTP patterns. Bandwidth management and traffic shaping can be used to smooth the peaks in network usage.

2. By deploying web caches. A cache saves a lot of time: instead of fetching the content from the origin server, which may be located far from the client, a request can be answered from a cached copy nearby, which reduces response time considerably.

3. By using different domain names to serve different kinds of content from separate web servers.

4. By using different domain names or computers to separate big files from small and medium-sized files. The idea is to be able to fully cache small and medium-sized files and to serve big or huge files efficiently by using different settings.

5. By running many web server programs per computer, each one bound to its own network card and IP address.

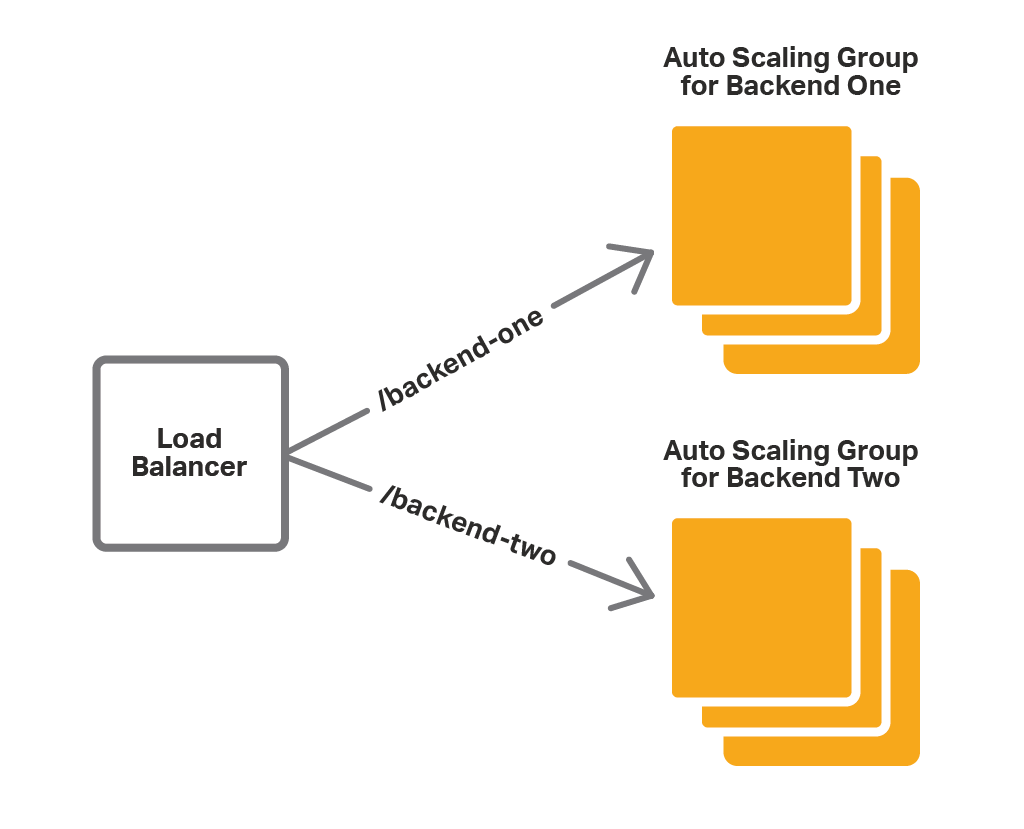

6. By using many computers that are arranged together behind a load balancer, so that they perform or are seen as one large web server.

7. By adding more hardware resources to each computer. This improves memory, both primary and secondary.

8. By tuning the OS parameters to match hardware capacity and usage.

9. By adopting more efficient computer programs for web servers. It is important to review the technology your organization currently uses, so that it runs smoothly and with fewer difficulties.

10. By applying other workarounds, particularly if dynamic content is involved. Dynamic content takes more time to serve than static pages because it is generated according to the user’s request and geographic location before being displayed.
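Two of the techniques above, traffic shaping (item 1) and load balancing (item 6), can be illustrated in a few lines of Python. This is a minimal sketch under stated assumptions, not how any particular firewall or load balancer works: the token bucket is one common way to smooth traffic peaks, round-robin is one simple balancing policy, and the class names `TokenBucket` and `RoundRobinBalancer` and the backend names are hypothetical.

```python
import itertools
import time
from typing import Callable, Iterator, List


class TokenBucket:
    """Allow short bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock          # injectable so the bucket can be tested
        self.tokens = capacity      # start with a full bucket
        self.last = clock()

    def allow(self) -> bool:
        """Return True if one more request may pass right now."""
        now = self.clock()
        # Add the tokens earned since the last call, capped at the bucket size.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False                # over the limit: drop, delay, or reject


class RoundRobinBalancer:
    """Hand out backends in turn so they look like one large server."""

    def __init__(self, backends: List[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._cycle: Iterator[str] = itertools.cycle(backends)

    def pick(self) -> str:
        """Return the backend that should handle the next request."""
        return next(self._cycle)


if __name__ == "__main__":
    bucket = TokenBucket(rate=5.0, capacity=10.0)   # assumed limits
    balancer = RoundRobinBalancer(["web-1", "web-2", "web-3"])
    for _ in range(4):
        if bucket.allow():
            print("forward to", balancer.pick())
        else:
            print("rejected: rate limit reached")
```

Real deployments usually add health checks and weighting to the balancer, and keep one bucket per client or IP address rather than a single global one. Those details are left out here to keep the sketch short.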

The above practices may hold true in one situation and not work in another. This implies that the preventive methods are not hard-and-fast rules. Trial and error is the key to finding out what actually suits your server’s needs.