How to resolve ‘too many requests’ error from the server?

- March 22, 2018


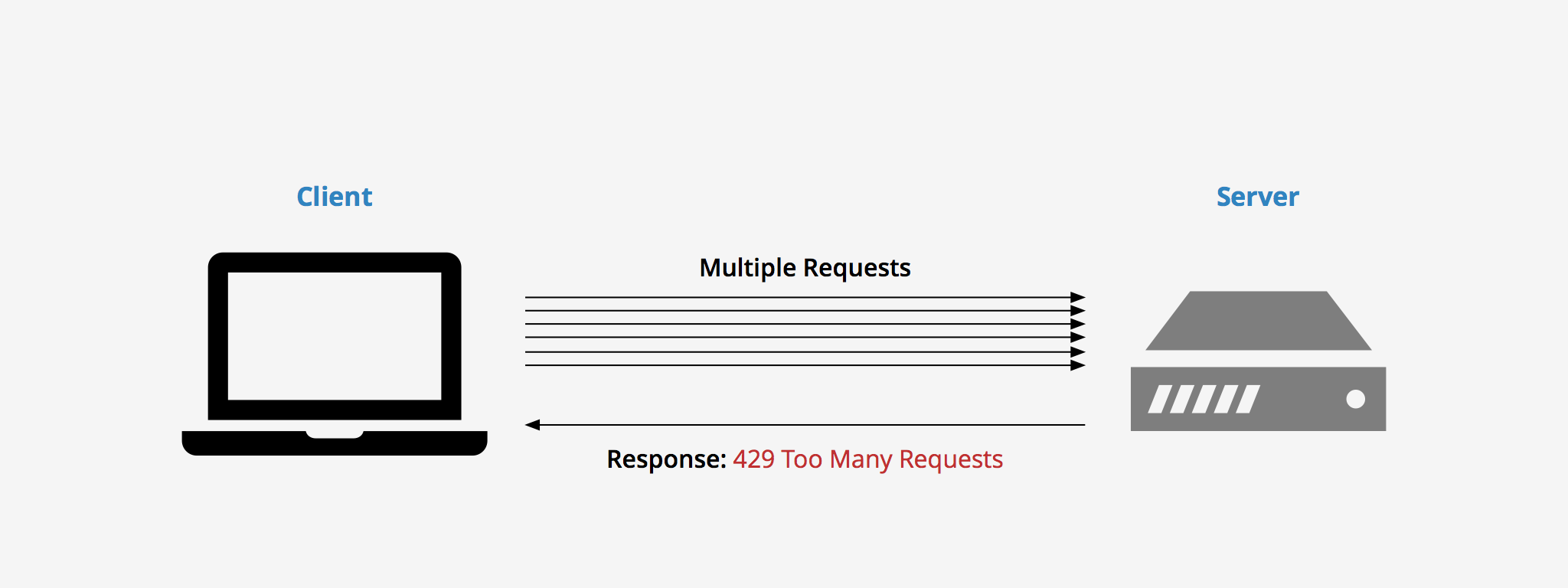

A 429 ‘Too Many Requests’ error occurs when a user sends too many requests within a given time frame. The error is triggered by the rate-limiting settings that a service provider has put in place on its servers. Rate limiting restricts the number of requests a user can make, which reduces the risk of the server becoming overloaded.

How To resolve a ‘too many requests’ Error 429?

Strictly speaking, there is nothing to ‘fix’, but the issue can be resolved by taking preventive measures once you know the capacity of the server and how the error actually arose. As stated earlier, a 429 error is caused by too many requests being made within a time frame, so the server is not prepared to handle all of the requests made at once. It is therefore necessary to understand how the rate-limiting scheme works in order to avoid a 429 error.

A 429 Too Many Requests response should also include details explaining the rate-limiting scheme in place. The server may also return a Retry-After header, which indicates how long the user should wait before making another request. An example of a 429 response may look similar to the following:

    HTTP/2 429 Too Many Requests
    Content-Type: text/html
    Retry-After: 600

    <html>
    <head>
    <title>Too Many Requests</title>
    </head>
    <body>
    <h1>Too Many Requests</h1>
    <p>Only 50 requests per hour are allowed on this site per
    logged in user. Better luck next time!
    </p>
    </body>
    </html>
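On the client side, the Retry-After header can be used to decide how long to pause before trying again. The following is a minimal Python sketch, not a definitive implementation. The names `parse_retry_after` and `send_with_retry` are made up for this illustration, and `send` is a hypothetical callable that performs the request and returns a status code together with a plain dictionary of headers.

```python
import time
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def parse_retry_after(value, now=None):
    """Return the seconds to wait for a Retry-After header value.

    The header carries either a number of seconds ("600") or an HTTP date.
    """
    value = value.strip()
    if value.isdigit():
        return float(value)
    now = now or datetime.now(timezone.utc)
    # A date in the past means there is nothing left to wait for.
    return max(0.0, (parsedate_to_datetime(value) - now).total_seconds())


def send_with_retry(send, max_attempts=3, sleep=time.sleep, default_wait=1.0):
    """Call send() until it returns a non-429 status or attempts run out.

    `send` is a hypothetical callable returning (status_code, headers_dict).
    """
    status = None
    for attempt in range(max_attempts):
        status, headers = send()
        if status != 429:
            break
        if attempt < max_attempts - 1:
            header = headers.get("Retry-After")
            # Fall back to a fixed pause when the server gives no hint.
            sleep(parse_retry_after(header) if header else default_wait)
    return status
```

With the example response above, `parse_retry_after("600")` yields a wait of 600 seconds, that is, ten minutes before the next attempt.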

Although returning a 429 status code is helpful because it tells users that they have reached the limit of allowable requests, servers are not required to return this status code. For instance, if a server is under attack, returning a 429 error for every request would itself consume significant resources. In such a case it may be better to simply drop connections or use another form of protection that is less resource intensive.


Rate limiting controls the amount of incoming and outgoing traffic to and from a network. For example, say you are using a service’s API that is configured to allow 100 requests per minute. If the number of requests you make exceeds that limit, an error is triggered. The reasoning behind rate limits is to allow a better flow of data and to improve security by mitigating attacks such as DDoS.

Rate limiting is also beneficial when a user on the network makes a mistake in a request that asks the server to retrieve huge amounts of data, which could overload the network for everyone. With rate limiting in place, these kinds of errors and attacks are much easier to contain.
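To make the 100 requests per minute example concrete, here is a small Python sketch of one possible way to enforce such a limit, a sliding window over request timestamps. It is only an illustration under assumptions: the class name `SlidingWindowLimiter` is invented here, and real services may use a different algorithm such as a token or leaky bucket.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds."""

    def __init__(self, limit=100, window=60.0):
        self.limit = limit
        self.window = window
        self.timestamps = deque()  # times of the requests still in the window

    def allow(self, now):
        # Forget requests that have fallen out of the window.
        while self.timestamps and now - self.timestamps[0] >= self.window:
            self.timestamps.popleft()
        if len(self.timestamps) < self.limit:
            self.timestamps.append(now)
            return True
        return False  # over the limit: a server would answer with 429 here
```

The current time is passed in explicitly (for example from `time.monotonic()`), which keeps the sketch easy to test. Once 100 requests have been accepted within a minute, further calls to `allow` return `False` until the oldest request is more than 60 seconds old.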

Methods of rate limiting used to Resolve ‘too many requests’

There are two rate-limiting methods:

- Rate limiting per user agent, to protect against bots and crawlers that exhaust server resources, and

- Rate limiting per IP, to protect against hackers, SEO analyzers and testing tools.

Both rate-limiting methods are implemented using a rate limit zone. A zone is an allocation in memory where the server stores its connection data to determine whether a user agent or IP should be rate limited.

Find out which rate limiting method applies

When an IP address or user agent is blocked, an entry is written to the error log, for instance the Nginx error log file /var/log/nginx/error.log, stating the zone to which the limit applies.

To find out which rate-limiting method is in effect, look up the corresponding entries in the error log and check which zone the request was limited by. For example, a log entry for a request that was limited by user agent (requests per second) refers to the bots zone.
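Scanning the error log for zone names can be automated. The Python sketch below counts rate-limit entries per zone, assuming the messages follow the wording Nginx normally uses ("limiting requests ... by zone" and "limiting connections by zone"). The function name `count_limited_zones` is hypothetical, and apart from the bots zone named in this article any zone names are placeholders.

```python
import re
from collections import Counter

# Picks out the kind of limit and the zone name from messages such as:
#   limiting requests, excess: 0.586 by zone "bots"
#   limiting connections by zone "some_zone"
ZONE_PATTERN = re.compile(r'limiting (requests|connections).*? by zone "([^"]+)"')


def count_limited_zones(lines):
    """Count rate-limit log entries per (kind, zone) pair."""
    counts = Counter()
    for line in lines:
        match = ZONE_PATTERN.search(line)
        if match:
            counts[match.groups()] += 1
    return counts


if __name__ == "__main__":
    # Assumes the log location mentioned in the text.
    with open("/var/log/nginx/error.log") as log:
        for (kind, zone), total in count_limited_zones(log).most_common():
            print(f"{zone}: {total} limited {kind}")
```

A high count for the bots zone points to user agent based limiting, while entries that mention limited connections point to the per IP limit.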

Rate limiting against bots and crawlers

Every day, your website may be visited by many different bots. Some, like Google, are important, but many have a negative impact on your site, especially if they do not follow your robots.txt. To protect against the performance impact of misbehaving bots, the server uses an advanced rate-limiting mechanism. This slows down the hit rate of unimportant bots, leaving more capacity for the bots that matter.

Denying with 429 Too Many Requests

Our aim is not to block bots but to rate limit them properly, so we have to be careful about how we reject them. The best way is to answer with the 429 Too Many Requests status. This tells the bot that the site exists but the server is temporarily unavailable to it, so it may try again later. Because the site is reachable when the bot connects, this does not negatively affect ranking in any search engine.

Configuring the bot rate limiter

Typically, good bots such as Google, Bing and several monitoring systems are excluded from rate limiting. Bad bots are limited to 1 request per second, while good bots are never rate limited. If you want, you can override the system-wide configuration.

For instance, place the following in a config file /data/web/nginx/http.ratelimit:

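    map $http_user_agent $limit_bots {
        default '';
        # Whitelisted bots: an empty value means no rate limiting
        ~*(google|bing|pingdom|monitis.com|Zend_Http_Client|SendCloud|magereport.com) '';
        # Generic bots: placed in the 'bot' group and rate limited
        ~*(http|crawler|spider|bot|search|Wget/|Python-urllib|PHPCrawl|bGenius) 'bot';
    }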

First, bots with ‘google’, ‘bing’, etc. in their user agent are marked as neutral (an empty value), and then generic bots with ‘crawler’, ‘spider’, ‘bot’ and similar keywords are placed in the group ‘bot’. The keywords to match are separated by ‘|’ characters.
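The order of the two expressions matters, because the first expression that matches decides the outcome. The Python sketch below imitates that behaviour so you can try user agents against the rules before changing the server configuration. It is an approximation only: the function name `limit_bots` is invented here, and Python's `re` module stands in for the regular expression engine Nginx uses.

```python
import re

# The two expressions from the map above, checked in order; the first match wins.
RULES = [
    (re.compile(r"(google|bing|pingdom|monitis.com|Zend_Http_Client|SendCloud|magereport.com)",
                re.IGNORECASE), ""),
    (re.compile(r"(http|crawler|spider|bot|search|Wget/|Python-urllib|PHPCrawl|bGenius)",
                re.IGNORECASE), "bot"),
]


def limit_bots(user_agent):
    """Return '' for neutral user agents and 'bot' for rate-limited ones."""
    for pattern, value in RULES:
        if pattern.search(user_agent):
            return value
    return ""  # corresponds to the map's default


if __name__ == "__main__":
    for agent in ["Googlebot/2.1 (+http://www.google.com/bot.html)",
                  "ExampleCrawler/1.0"]:
        print(repr(agent), "->", repr(limit_bots(agent)))
```

The Googlebot string contains both ‘google’ and ‘bot’, but since the whitelist expression is checked first it stays neutral, whereas the made-up ExampleCrawler agent ends up in the ‘bot’ group.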

Whitelisting more bots

To extend the whitelist, first determine which user agent has to be added. Use the log files to check which bots are being blocked and which user agent string they identify themselves with. Say, for example, that the bot we want to add has the User-Agent SpecialSnowflakeCrawler 3.1.4. It contains the word ‘crawler’, so it matches the second expression and is classified as a bot. Since the whitelist line takes precedence over the blacklist line, the best way to allow this bot is to add its user agent to the whitelist instead of removing ‘crawler’ from the blacklist:

    map $http_user_agent $limit_bots {
        default '';
        ~*(google|bing|pingdom|monitis.com|Zend_Http_Client|magereport.com|specialsnowflakecrawler) '';
        ~*(http|crawler|spider|bot|search|Wget/|Python-urllib|PHPCrawl|bGenius) 'bot';
    }

Although you can add the complete user agent string to the regex, it is usually sufficient to limit it to an identifying part, as shown above. Because the whole string is evaluated as a regular expression, take care with any characters other than letters and digits.
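The dot in ‘monitis.com’ is a good example of such a character: in a regular expression it matches any character, not just a literal dot. The short Python sketch below illustrates the difference, using Python's `re` module as a stand-in for the server's regex engine. Treat the escaped form as a hint about which characters are special rather than as text to paste into the configuration unchecked.

```python
import re

# Unescaped, the dot matches any single character.
loose = re.compile("monitis.com", re.IGNORECASE)

# Escaped, only a literal dot matches.
strict = re.compile(re.escape("monitis.com"), re.IGNORECASE)

if __name__ == "__main__":
    for agent in ["monitis.com probe", "monitisXcom probe"]:
        print(agent, bool(loose.search(agent)), bool(strict.search(agent)))
```

The loose pattern accepts both strings, while the strict pattern accepts only the one that contains a real dot.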

Rate limiting per IP address

To prevent a single IP from occupying all available FPM workers at the same time, leaving none for other visitors, we implement a per-IP rate limit mechanism. This mechanism caps the number of PHP-FPM workers that one IP can use at 20. This way a single IP cannot exhaust all available FPM workers, which would otherwise leave other visitors with an error page or an unresponsive site.

You do, however, have the option of manually excluding IP addresses from the per-IP rate limit. This way you can easily whitelist specific IPs without disabling the rate limit completely.

Exclude known IPs from the per IP rate limiting mechanism

If you want to exclude IPs from the per-IP rate limit, create a file /data/web/nginx/http.conn_ratelimit with the following contents:

    map $remote_addr $conn_limit_map {
        default $remote_addr;
        1.2.3.4 '';
    }

To exclude an IP (e.g. 1.2.3.4), add the IP to the mapping and set an empty value. This removes the IP from the rate limit.
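The reason an empty value works is that connections with an empty key are not counted against the limit. The Python sketch below models this idea under simplifying assumptions. The names `conn_limit_key` and `ConnectionLimiter` are invented for the illustration, and the real server tracks connections in a shared memory zone rather than in a dictionary.

```python
from collections import defaultdict

EXCLUDED_IPS = {"1.2.3.4"}  # same example address as in the map above
MAX_CONNECTIONS = 20        # per-IP worker cap mentioned in the text


def conn_limit_key(remote_addr):
    """Mirror the map: excluded IPs get an empty key, others their own address."""
    return "" if remote_addr in EXCLUDED_IPS else remote_addr


class ConnectionLimiter:
    def __init__(self, max_connections=MAX_CONNECTIONS):
        self.max_connections = max_connections
        self.active = defaultdict(int)  # open connections per key

    def acquire(self, remote_addr):
        key = conn_limit_key(remote_addr)
        if key == "":
            return True  # empty key: not counted, never limited
        if self.active[key] >= self.max_connections:
            return False  # over the cap: reported as "Too many requests"
        self.active[key] += 1
        return True

    def release(self, remote_addr):
        key = conn_limit_key(remote_addr)
        if key and self.active[key] > 0:
            self.active[key] -= 1
```

In this model an ordinary address is refused once it holds 20 connections, while 1.2.3.4 can open any number because it never enters the bookkeeping.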

Turn off per IP rate limiting

When site performance is very poor, it can happen that all FPM workers are busy just serving regular traffic. Handling a request then takes so long that all workers are continuously occupied by only a few visitors. Turning off the rate limit will not fix this problem; it will only change the error from a “Too many requests” error to a timeout error.

For debugging purposes, however, it can be useful to turn off the per-IP connection limit for all IPs.

With the following snippet in /data/web/nginx/http.conn_ratelimit it is possible to turn off IP-based rate limiting altogether:

    map $remote_addr $conn_limit_map {
        default '';
    }

Custom static error page to rate-limited IP addresses

It is possible to serve a custom error page to IPs that get rate limited. To do this, create a static HTML file containing your custom error page, and a file called /data/web/nginx/server.custom_429 with the content below:

    error_page 429 /ratelimited.html;
    location = /ratelimited.html {
        root /data/web/public;
        internal;
    }

To summarize, if you receive a 429 Too Many Requests error, you may have to reduce the number of requests you make to the server within the prescribed time frame. Alternatively, some services offer different plans that let you increase your request limit based on your needs.