What is server latency and why is it important?

- April 9, 2018


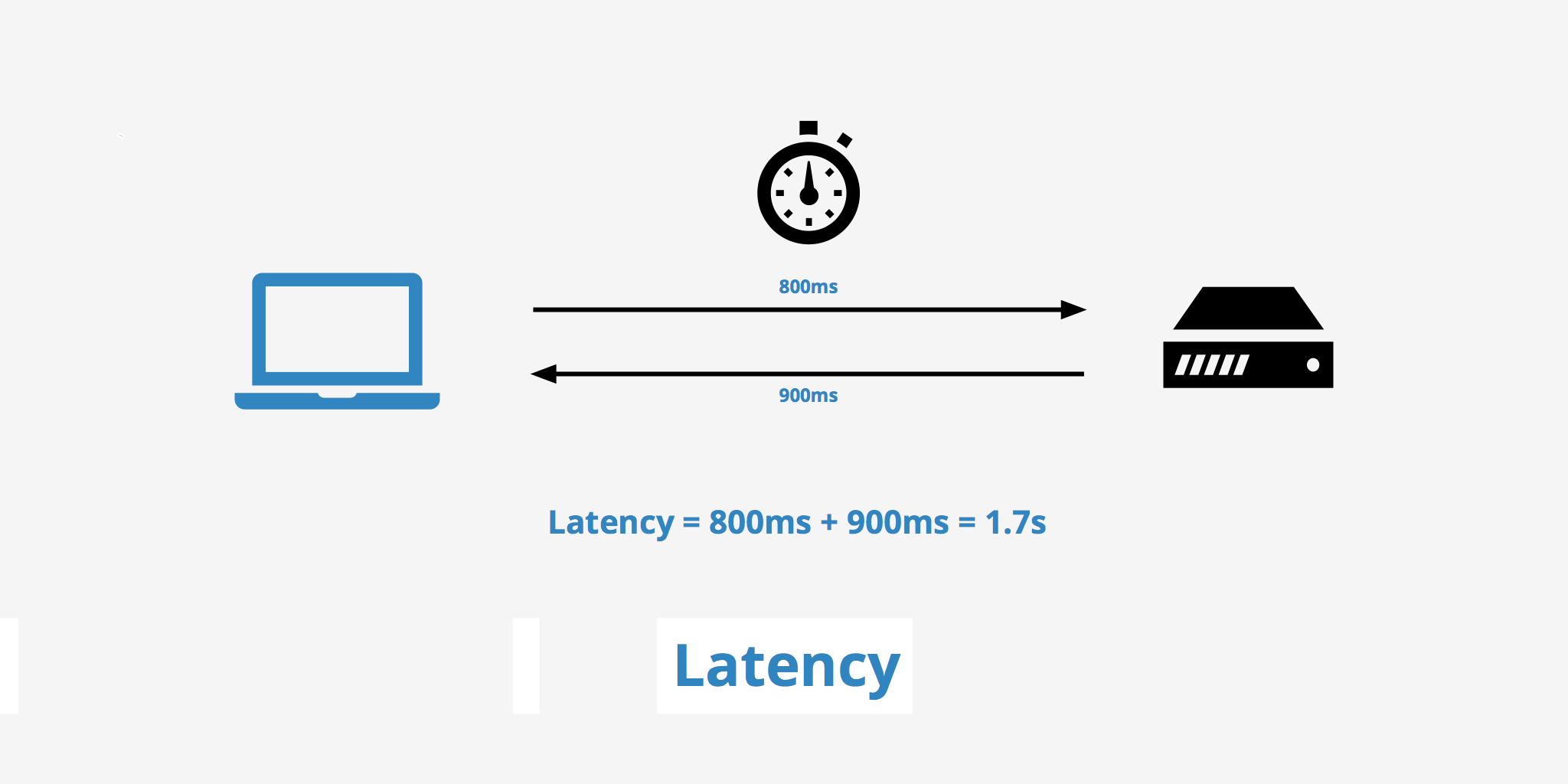

The time it takes for a message to travel across a system is called latency. Latency depends partly on the propagation speed of the transmission medium. In terms of web performance, latency is the time it takes for a data packet to travel from its source to its destination. When a request arrives, the host server also needs some time to receive and process it, and this delay is called server latency. Reducing latency is a top priority in performance-focused industries, because low latency is a sign of good network performance, while high latency slows pages down. Bandwidth and latency together determine the speed of a network.
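To illustrate how bandwidth and latency combine, here is a minimal sketch of a simplified model (an assumption, not a standard formula) in which fetching one object costs one round trip plus the time needed to push its bytes through the link. The function name `transfer_time_ms` and the example numbers are hypothetical.

```python
def transfer_time_ms(size_bytes: int, bandwidth_mbps: float, rtt_ms: float) -> float:
    """Rough time to fetch one object: one round trip plus serialization time.

    Simplified model (assumption): ignores TCP/TLS handshakes, slow start,
    packet loss, and server processing time.
    """
    # Bits to send, divided by the link rate expressed in bits per millisecond.
    serialization_ms = size_bytes * 8 * 1000 / (bandwidth_mbps * 1_000_000)
    return rtt_ms + serialization_ms


if __name__ == "__main__":
    # A hypothetical 100 KB resource over a link with a 50 ms round trip.
    for mbps in (10, 100, 1000):
        print(f"{mbps:>5} Mbps: {transfer_time_ms(100_000, mbps, 50):.1f} ms")
```

With these example numbers the estimate drops from 130 ms at 10 Mbps to 58 ms at 100 Mbps, but only to about 50.8 ms at 1 Gbps: once bandwidth is high, the round-trip latency dominates.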

Causes of server latency

- The distance between the server and the user is a key factor. However close or far the host server is, there will always be some latency between the server and the user, because the signal needs time to cover that distance.

- High latency can occur when a router or modem is overloaded. When many users are active at the same time, latency tends to rise. Upgrading to a more powerful router or adding another router may reduce the problem.

- Heavy traffic routed between servers and other back-end devices can also increase latency.

- Internet bandwidth can also affect latency, although only indirectly: when a link is saturated, packets must wait in queues before they are sent.

- The transmission medium, such as optical fiber or a wireless connection, also affects latency in a network.
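Distance and transmission medium together set a floor on latency that no hardware upgrade can remove. The sketch below estimates that floor, assuming signals in optical fiber travel at roughly two-thirds of the speed of light (a common approximation, exposed as the `velocity_factor` parameter). Real cable routes are usually longer than the straight-line distance, so actual delays are higher. The function names are hypothetical.

```python
# Speed of light in a vacuum, in kilometres per second.
SPEED_OF_LIGHT_KM_S = 299_792.458


def propagation_delay_ms(distance_km: float, velocity_factor: float = 2 / 3) -> float:
    """One-way propagation delay over a cable of the given length.

    velocity_factor is the signal speed as a fraction of c; about 2/3 is an
    approximation for optical fiber (assumption, not a measured value).
    """
    return distance_km / (SPEED_OF_LIGHT_KM_S * velocity_factor) * 1000


def min_rtt_ms(distance_km: float, velocity_factor: float = 2 / 3) -> float:
    """Lower bound on round-trip time: there and back, with no queuing or processing."""
    return 2 * propagation_delay_ms(distance_km, velocity_factor)


if __name__ == "__main__":
    for km in (100, 1_000, 10_000):  # hypothetical server distances
        print(f"{km:>6} km: one-way {propagation_delay_ms(km):.2f} ms, "
              f"minimum RTT {min_rtt_ms(km):.2f} ms")
```

Under this assumption, a server 10,000 km away can never answer in less than about 100 ms round trip, which is why reducing the distance between server and user matters.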

Checking or measuring latency

Server latency can be measured in several ways:

- One method is to measure the Round Trip Time (RTT): the time it takes for a request to travel from the user's device to a server and back. A command-line tool such as ping sends a packet and times how long the reply takes to return. This method is generally considered an accurate measure of latency, apart from certain scenarios.

- The second method is Time to First Byte (TTFB): the time between the browser sending its initial request and receiving the first byte of the server's response. It therefore includes both network latency and the time the server spends processing the request. TTFB can be measured as actual TTFB or perceived TTFB.

- Running a speed test can also help measure latency. A speed test reports download speed and upload speed along with latency.

- A ping test checks the time required for a data packet to travel from your computer to a server and back. Some ping tests can check multiple servers at the same time and report on overall performance and latency.
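The measurements above can be sketched in a few lines of standard-library Python. This is an illustration under stated assumptions, not how ping or any particular speed-test tool works internally. The ping tool sends ICMP echo packets, which usually requires elevated privileges, so `tcp_rtt_ms` instead approximates one RTT by timing a TCP handshake. `ttfb_ms` sends a plain HTTP request (no TLS) and times the arrival of the first response byte. `ping_report` probes several servers concurrently and summarizes the samples. All three function names are hypothetical.

```python
import socket
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def tcp_rtt_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Approximate one round trip by timing a TCP handshake.

    connect() returns once the server's SYN-ACK arrives, so the elapsed
    time is roughly one RTT plus a little local overhead.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000


def ttfb_ms(host: str, port: int, path: str = "/", timeout: float = 2.0) -> float:
    """Time from sending a plain-HTTP GET until the first response byte arrives.

    The clock starts after the TCP connection is open, so DNS lookup and
    connection setup are not included (definitions of TTFB vary on this).
    """
    request = (f"GET {path} HTTP/1.1\r\nHost: {host}\r\n"
               "Connection: close\r\n\r\n").encode("ascii")
    with socket.create_connection((host, port), timeout=timeout) as sock:
        start = time.perf_counter()
        sock.sendall(request)
        first = sock.recv(1)  # blocks until the first response byte arrives
        elapsed = (time.perf_counter() - start) * 1000
    if not first:
        raise ConnectionError("server closed the connection without responding")
    return elapsed


def _summarize(target: tuple, count: int) -> dict:
    """Take `count` RTT samples for one (host, port) and return min/avg/max in ms."""
    host, port = target
    samples = [tcp_rtt_ms(host, port) for _ in range(count)]
    return {"min": min(samples), "avg": statistics.mean(samples), "max": max(samples)}


def ping_report(targets: list, count: int = 4) -> dict:
    """Probe several servers at the same time, one worker thread per target."""
    with ThreadPoolExecutor(max_workers=max(1, len(targets))) as pool:
        results = pool.map(lambda t: _summarize(t, count), targets)
        return dict(zip(targets, results))
```

For example, `ping_report([("example.com", 80), ("example.org", 80)])` would return a dictionary mapping each target to its minimum, average, and maximum RTT in milliseconds. Only probe servers you are permitted to test.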

Importance of server latency

Online games

Server latency is crucial in areas of computing where an instant response is needed, and online gaming is one of them. In online video games, latency is usually called ‘ping’ and is measured in milliseconds: it is the time taken for the gaming device to send data to the server and receive a response back. Low latency is necessary for a good experience in online games.

High latency can cause several problems for players, such as other players appearing and disappearing. In games like racing, where timing is everything, latency plays a major role: high latency causes lag, making the game less enjoyable. Regular online gamers generally expect latency below 50 ms. A fast internet connection may help keep latency low.

Websites

Server latency is extremely important for loading web pages, especially on commercial websites. Most web pages include many resources, such as images and CSS files. To fetch these objects, the browser has to make several requests to the website’s host servers. If these requests suffer from high latency, the page loads slowly and the user experience suffers.
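Because a page needs many requests, per-request latency adds up. The sketch below uses a deliberately simplified model (an assumption): each request costs exactly one round trip, requests on the same connection run back to back, and bandwidth and server processing time are ignored. The function name `page_latency_ms` and the `parallel_connections` parameter are hypothetical.

```python
import math


def page_latency_ms(num_requests: int, rtt_ms: float, parallel_connections: int = 1) -> float:
    """Latency cost of fetching num_requests resources at one RTT per request.

    Requests are spread evenly over parallel_connections; each connection
    handles its share one after another.
    """
    if parallel_connections < 1:
        raise ValueError("parallel_connections must be at least 1")
    rounds = math.ceil(num_requests / parallel_connections)
    return rounds * rtt_ms


if __name__ == "__main__":
    # A hypothetical page with 30 resources and a 50 ms round trip.
    print(page_latency_ms(30, 50))     # one request at a time
    print(page_latency_ms(30, 50, 6))  # six requests in flight at once
```

In this model, 30 sequential requests at 50 ms each cost 1,500 ms, while six parallel connections cut that to 250 ms. In both cases the total scales directly with the round-trip time, so halving latency halves the delay.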

To summarize, latency is present in all internet connections and networks, and high latency often leads to user dissatisfaction and lost productivity. Its effect varies from one application to another. There are several ways to reduce it, including upgrading hardware and software and reducing the distance between the server and the user. Regular latency checks help keep it low. Even a difference of a few milliseconds can improve or hamper performance.