An Introduction to Apache Sqoop | Everything You Need to Know

- October 25, 2019


Data is growing at an exponential rate, so we need a consistent approach that not only stores this information but also keeps it usable when needed. A traditional Relational Database Management System (RDBMS) on its own struggles at this scale, because accessing and retrieving very large volumes of data takes a significant amount of time. In practice, businesses cannot wait that long, because time is money! So a different approach is needed to go further.

But don’t get us wrong: we still cannot eliminate relational databases entirely. Rather, data has to flow smoothly between the RDBMS and Hadoop (where the Big Data is kept), so that work such as data manipulation can be done in Hadoop without burdening the RDBMS itself.

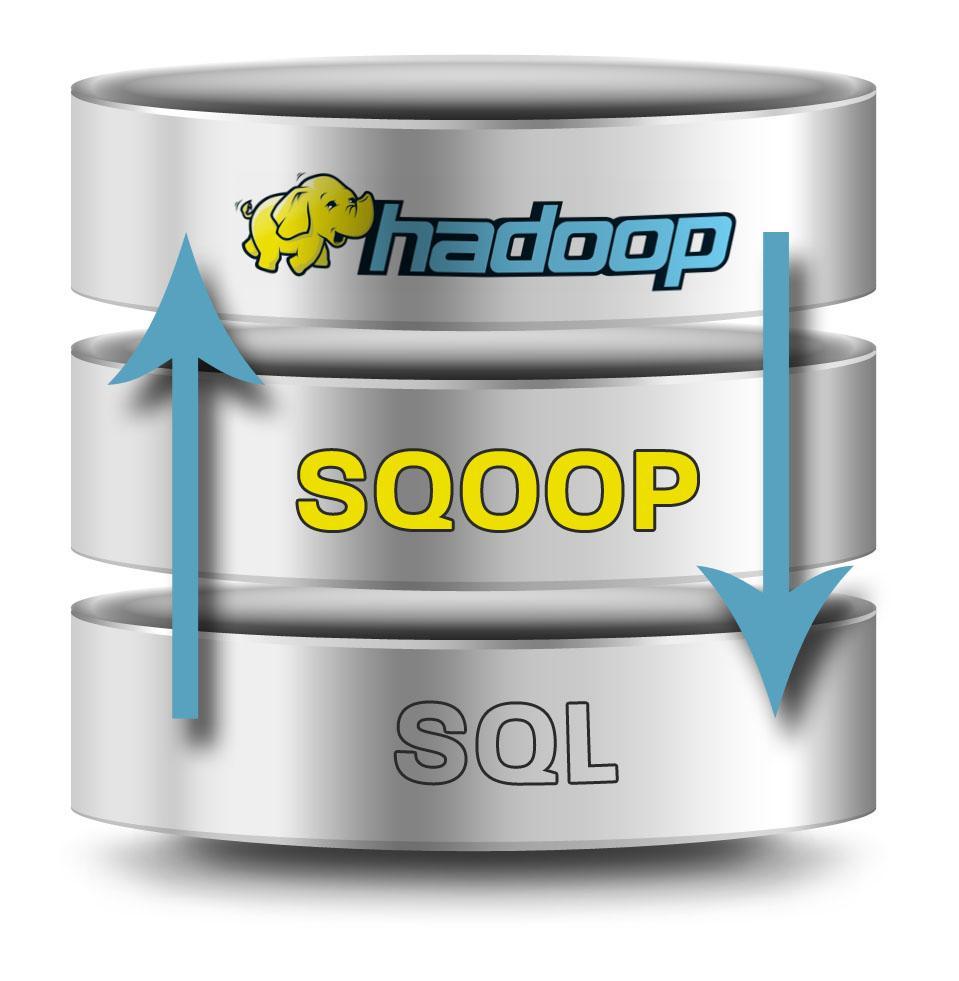

So, what is Apache Sqoop?

Sqoop (the name is short for "SQL-to-Hadoop") is a command-line application. It was first released in 2009, later became a top-level Apache Software Foundation project, and its latest stable release (1.4.7) came out in 2017.

Apache Sqoop is designed to import and export large amounts of data between relational databases and Apache Hadoop. Sqoop can also move data between Hadoop and enterprise data warehouses, and it can load imported data into NoSQL stores such as HBase. Although the same task can be done by writing custom scripts, Sqoop makes it much faster and more efficient.

Integrating an RDBMS with HDFS (Hadoop Distributed File System) is essential so that both structured and unstructured data can be processed whenever required. Doing this manually is inefficient, costly, and time-consuming; Sqoop is designed to avoid these problems. As mentioned earlier, Apache Sqoop handles both import and export of data, so let us look at each briefly.

Data Import

Import is a two-step process: first, Sqoop gathers the necessary metadata for the data to be imported; next, it transfers the actual data using that metadata.
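The two phases can be illustrated with a small Python sketch. It is not Sqoop itself: it uses an in-memory SQLite database (through the standard `sqlite3` module) as a stand-in for the RDBMS, and the table `employees` and its columns are hypothetical. Phase 1 reads column names and types from the database catalog; phase 2 reads the rows using that column list and emits comma-delimited text records, similar in spirit to Sqoop's default text output.

```python
import sqlite3


def gather_metadata(conn, table):
    """Phase 1: read column names and declared types from the database catalog."""
    # PRAGMA table_info yields (cid, name, type, notnull, default_value, pk) per column.
    rows = conn.execute(f"PRAGMA table_info({table})")
    return [(name, col_type) for _, name, col_type, _, _, _ in rows]


def transfer_rows(conn, table, columns):
    """Phase 2: read rows using the gathered column list and yield delimited text records."""
    col_list = ", ".join(name for name, _ in columns)
    for row in conn.execute(f"SELECT {col_list} FROM {table}"):
        # This sketch writes NULL as an empty field; Sqoop's own NULL handling is configurable.
        yield ",".join("" if value is None else str(value) for value in row)


# Hypothetical source table standing in for a production RDBMS table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
conn.executemany("INSERT INTO employees VALUES (?, ?, ?)",
                 [(1, "Ada", 5200.0), (2, "Grace", None)])

metadata = gather_metadata(conn, "employees")
records = list(transfer_rows(conn, "employees", metadata))
print(metadata)
print(records)
```

Separating the phases mirrors the text above: the column list from phase 1 is what drives the query and the record layout in phase 2.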

Each row in a database table is imported as a record. Records are stored either as text (delimited text files) or in binary form as SequenceFiles or Avro data files.
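Sqoop selects the output format with a flag on `sqoop import`: `--as-textfile` (the default), `--as-sequencefile`, or `--as-avrodatafile`. The Python sketch below only assembles such a command line and runs nothing; the function name, the JDBC URL, the table, and the HDFS path are hypothetical.

```python
import shlex

# File-format flags accepted by `sqoop import` (Sqoop 1.x) for the formats named above.
FORMAT_FLAGS = {
    "text": "--as-textfile",          # delimited text (the default)
    "sequence": "--as-sequencefile",  # binary Hadoop SequenceFiles
    "avro": "--as-avrodatafile",      # binary Avro data files
}


def build_import_command(connect_url, table, target_dir, file_format="text"):
    """Return the argument list for a hypothetical `sqoop import` invocation."""
    if file_format not in FORMAT_FLAGS:
        raise ValueError(f"unsupported file format: {file_format!r}")
    return [
        "sqoop", "import",
        "--connect", connect_url,
        "--table", table,
        "--target-dir", target_dir,
        FORMAT_FLAGS[file_format],
    ]


cmd = build_import_command("jdbc:mysql://db.example.com/corp", "employees",
                           "/data/employees", "avro")
print(shlex.join(cmd))
```

Building the command as a list rather than one string avoids shell-quoting mistakes if it is later passed to `subprocess.run`.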

Data can also be imported directly into Hive: Sqoop can create a Hive table or partition and load it as part of the import. Done manually, this requires correctly mapping the database columns and types to the Hive table definition. Sqoop instead derives the table definition from the database metadata, which populates the Hive metastore, and runs the statements needed to load and partition the table.
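On the command line, a Hive import is requested with `--hive-import`, optionally naming the target with `--hive-table` and a single static partition with `--hive-partition-key` and `--hive-partition-value`. The Python sketch below only builds the argument list; the function name and every URL, table, and partition value are hypothetical.

```python
import shlex


def build_hive_import_command(connect_url, table, hive_table,
                              partition_key=None, partition_value=None):
    """Return argv for a hypothetical `sqoop import` that loads into a Hive table."""
    if (partition_key is None) != (partition_value is None):
        raise ValueError("partition_key and partition_value must be given together")
    cmd = [
        "sqoop", "import",
        "--connect", connect_url,
        "--table", table,
        "--hive-import",               # load the imported data into Hive after the HDFS import
        "--hive-table", hive_table,
    ]
    if partition_key is not None:
        # All rows from this run land in one static Hive partition.
        cmd += ["--hive-partition-key", partition_key,
                "--hive-partition-value", partition_value]
    return cmd


cmd = build_hive_import_command("jdbc:postgresql://db.example.com/sales", "orders",
                                "analytics.orders", "load_date", "2019-10-25")
print(shlex.join(cmd))
```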

Data Export

Export is the transfer of data from HDFS to an RDBMS. The files Sqoop reads as input contain records, each of which becomes a row in the target table. Export also has two steps: first, Sqoop examines the database for metadata; then it transfers the actual data.
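The two export steps can be sketched in Python, again with an in-memory SQLite database standing in for the RDBMS and a list of strings standing in for a delimited file in HDFS. This is a conceptual illustration, not Sqoop's implementation; the table, its columns, and the `CASTS` type map are hypothetical. With Sqoop itself, an export is started with `sqoop export` and arguments such as `--connect`, `--table`, and `--export-dir`.

```python
import sqlite3

# Hypothetical mapping from declared SQLite column types to Python converters.
CASTS = {"INTEGER": int, "REAL": float, "TEXT": str}


def column_types(conn, table):
    """Step 1: look up column names and declared types in the database catalog."""
    rows = conn.execute(f"PRAGMA table_info({table})")
    return [(name, col_type.upper()) for _, name, col_type, _, _, _ in rows]


def export_records(conn, table, lines, delimiter=","):
    """Step 2: parse each text record, insert it as a row, and return the row count."""
    columns = column_types(conn, table)
    names = ", ".join(name for name, _ in columns)
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({names}) VALUES ({placeholders})"
    rows = []
    for line in lines:
        fields = line.rstrip("\n").split(delimiter)
        if len(fields) != len(columns):
            raise ValueError(f"expected {len(columns)} fields, got {len(fields)}: {line!r}")
        # Convert each field using the type reported by the database (KeyError on unknown types).
        rows.append(tuple(CASTS[col_type](field)
                          for (_, col_type), field in zip(columns, fields)))
    with conn:  # this sketch commits all rows together, or rolls back on error
        conn.executemany(sql, rows)
    return len(rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, salary REAL)")
count = export_records(conn, "employees", ["1,Ada,5200.0\n", "2,Grace,6100.5\n"])
print(count, conn.execute("SELECT * FROM employees ORDER BY id").fetchall())
```

Looking up the column types first is what lets each text field be converted to the right type before insertion.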

Apache Sqoop uses the MapReduce framework for both import and export, which makes transfers parallel and fault-tolerant. Developers need to supply details such as database credentials, the source, and the destination. For export, the input data is divided into splits, and map tasks push each split into the database.

Under the hood, Apache Sqoop is fairly straightforward. The dataset is divided into several partitions, and each mapper is responsible for transferring one partition. Because Sqoop uses the database’s metadata to determine column types, each record is handled in a type-safe manner.
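By default Sqoop picks a split column (the table's primary key, or the column given with `--split-by`), queries its minimum and maximum values, and divides that range among the mappers (`--num-mappers`, 4 by default). The Python sketch below shows a simplified integer version of that idea; Sqoop's real splitters also handle other column types and NULLs and may place boundaries differently, and the table and column names are hypothetical.

```python
def integer_splits(min_val, max_val, num_mappers):
    """Divide the inclusive range [min_val, max_val] into at most num_mappers contiguous ranges."""
    if num_mappers < 1:
        raise ValueError("num_mappers must be at least 1")
    if max_val < min_val:
        raise ValueError("max_val must not be smaller than min_val")
    span = max_val - min_val + 1
    count = min(num_mappers, span)          # never create empty splits
    size, remainder = divmod(span, count)   # spread leftover values over the first splits
    splits, lower = [], min_val
    for i in range(count):
        upper = lower + size - 1 + (1 if i < remainder else 0)
        splits.append((lower, upper))
        lower = upper + 1
    return splits


def split_queries(table, column, min_val, max_val, num_mappers=4):
    """Build one bounded SELECT per mapper; together they cover every non-NULL key exactly once."""
    return [
        f"SELECT * FROM {table} WHERE {column} >= {lo} AND {column} <= {hi}"
        for lo, hi in integer_splits(min_val, max_val, num_mappers)
    ]


for query in split_queries("employees", "id", 1, 10):
    print(query)
```

Because the ranges do not overlap, each mapper can run its query independently, and a failed mapper can be retried without touching the others.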

Sqoop Connectors

Sqoop connectors handle data transfer between external sources and Hadoop. During export, they can also protect production tables from corruption if a job fails: map tasks first populate a staging table, and only after the job succeeds are the staged rows moved into the target table.
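For exports, Sqoop exposes this protection through `--staging-table` (with `--clear-staging-table` to empty it first). The Python sketch below imitates the pattern with SQLite: rows are loaded into a staging table, and only a fully successful load is moved into the target table in a single transaction. The table names are hypothetical, and this illustrates the pattern rather than Sqoop's own code.

```python
import sqlite3


def export_via_staging(conn, target, staging, rows):
    """Load rows into a staging table, then move them into the target atomically."""
    # Step 1: empty and fill the staging table; any error rolls this back and
    # leaves the target table untouched.
    with conn:
        conn.execute(f"DELETE FROM {staging}")
        conn.executemany(f"INSERT INTO {staging} (id, name) VALUES (?, ?)", rows)
    # Step 2: publish the staged rows to the target in one transaction.
    with conn:
        conn.execute(f"INSERT INTO {target} SELECT * FROM {staging}")
        conn.execute(f"DELETE FROM {staging}")


conn = sqlite3.connect(":memory:")
for name in ("accounts", "accounts_staging"):
    conn.execute(f"CREATE TABLE {name} (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

export_via_staging(conn, "accounts", "accounts_staging", [(1, "alpha"), (2, "beta")])

try:
    # The second row violates NOT NULL, simulating a failed export job.
    export_via_staging(conn, "accounts", "accounts_staging", [(3, "gamma"), (4, None)])
except sqlite3.IntegrityError as exc:
    print("export failed, target unchanged:", exc)

print(conn.execute("SELECT * FROM accounts ORDER BY id").fetchall())
```

The failed batch never reaches `accounts`, which is exactly the guarantee a staging table is meant to provide.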

To connect to external systems, Sqoop can use specialized connectors that optimize import and export for a particular database instead of relying only on the generic JDBC path. These connectors are plugins installed alongside Apache Sqoop and are part of its extension framework.

Connectors exist for many databases, including Microsoft SQL Server, MySQL, IBM DB2, and PostgreSQL, and they can transfer data at high speed. Sqoop also ships a generic JDBC connector that works with any database reachable through JDBC. Some companies develop their own connectors as well, for systems ranging from enterprise data warehouses to NoSQL datastores.
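Which connector Sqoop uses is driven mainly by the JDBC URL passed to `--connect`; passing `--driver` with a driver class (and no `--connection-manager`) makes Sqoop fall back to its generic JDBC manager, and `--direct` asks for a database-specific fast path where the connector provides one. The Python sketch below builds such arguments for the databases named above, using each vendor's usual JDBC URL form and default port; the helper names, hosts, and database names are hypothetical.

```python
import shlex

# Usual default ports for each vendor's database server.
DEFAULT_PORTS = {"mysql": 3306, "postgresql": 5432, "sqlserver": 1433, "db2": 50000}


def jdbc_url(vendor, host, database, port=None):
    """Build a JDBC URL in the form each vendor's driver commonly uses."""
    if vendor not in DEFAULT_PORTS:
        raise ValueError(f"unknown vendor: {vendor!r}")
    port = port or DEFAULT_PORTS[vendor]
    if vendor == "sqlserver":
        # SQL Server passes the database name as a property rather than a path.
        return f"jdbc:sqlserver://{host}:{port};databaseName={database}"
    return f"jdbc:{vendor}://{host}:{port}/{database}"


def connection_args(url, driver_class=None, direct=False):
    """Return Sqoop connection arguments for a hypothetical job."""
    args = ["--connect", url]
    if driver_class:
        # Without --connection-manager, Sqoop then uses its generic JDBC manager.
        args += ["--driver", driver_class]
    if direct:
        args.append("--direct")  # only meaningful if the connector has a direct mode
    return args


url = jdbc_url("mysql", "db.example.com", "corp")
print(shlex.join(["sqoop", "import", *connection_args(url, direct=True), "--table", "employees"]))
print(jdbc_url("sqlserver", "mssql.example.com", "corp"))
```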

The real work begins once the data has been loaded from the relational database into a form that HDFS supports.

Top Benefits of Apache Sqoop

- Combining data types: Structured data moved out of relational databases can be combined in Hadoop with semi-structured and unstructured data.

- Parallel data transfer: Data is moved quickly and cost-effectively.

- Reduced time: Many tasks require moving large amounts of data back and forth. Sqoop does this quickly, so organizations can focus on analyzing the data for actionable insights.

- Decreased cost: Offloading data movement, extraction, and transformation to separate tools costs more than doing it with Hadoop’s own resources.

- Return on investment: Although organizations must manage vast amounts of semi-structured and unstructured data, many have made large investments in relational technologies. Sqoop lets them keep getting value from those investments in structured data.

- Schema on read: Sqoop lets users combine structured, semi-structured, and unstructured data in a schema-on-read environment, which helps organizations analyze data faster and more effectively.

- Better query performance: Sqoop can compress imported data and write it in binary formats such as Avro, which can speed up the queries that later run against it.

There are cases in which Apache Sqoop is not the right solution, because it requires computing resources that not every organization can provide. But don’t worry: there are alternatives such as Apache Spark SQL. If you have any questions, or if you think a few more points should have been discussed in this blog, please let us know in the comments section.
